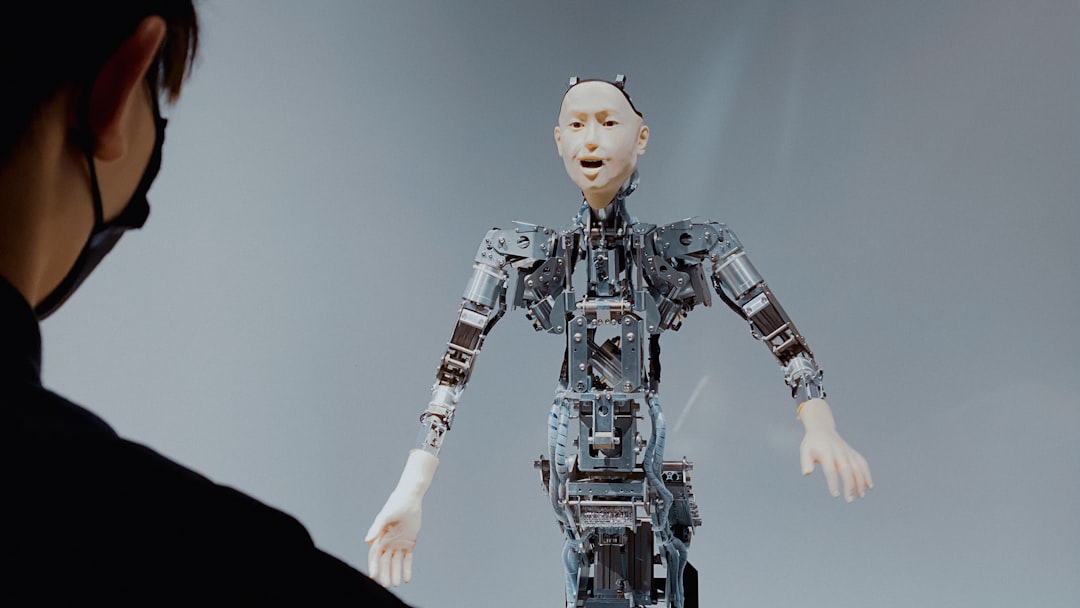

AI alignment refers to the process of ensuring that artificial intelligence systems act in accordance with human values and intentions. As AI technology continues to advance at an unprecedented pace, the need for alignment becomes increasingly critical. The concept encompasses a range of considerations, from the technical aspects of programming AI to the philosophical implications of what it means for machines to understand and prioritize human values. At its core, AI alignment seeks to bridge the gap between human objectives and machine actions, ensuring that AI systems do not operate in ways that could be harmful or counterproductive.

The challenge of AI alignment is multifaceted. It involves not only the technical design of algorithms but also a deep understanding of human ethics, psychology, and social dynamics. As AI systems become more autonomous, the potential for misalignment grows, leading to outcomes that may diverge significantly from human intentions. This necessitates a comprehensive approach that integrates insights from various disciplines, including computer science, philosophy, and cognitive science, to create AI systems that are not only intelligent but also aligned with the broader goals of society.

Key Takeaways

- AI alignment aims to ensure that artificial intelligence systems act in ways consistent with human values and ethics.

- Unaligned AI poses significant risks, including unintended harmful consequences for society.

- Ethical considerations are crucial in AI development and decision-making processes.

- Achieving AI alignment involves technical, moral, and practical challenges that require multidisciplinary approaches.

- The future of AI depends on successfully integrating ethical principles into AI design and implementation.

The Importance of Ethical Artificial Intelligence

The importance of ethical artificial intelligence cannot be overstated in today’s rapidly evolving technological landscape. Ethical AI is essential for fostering trust among users and stakeholders, ensuring that these technologies serve the public good rather than exacerbate existing inequalities or create new forms of discrimination. By prioritizing ethical considerations in AI development, society can harness the benefits of these technologies while mitigating potential harms.

Moreover, ethical AI serves as a foundation for sustainable innovation. When developers and organizations commit to ethical principles, they create a framework that guides decision-making throughout the lifecycle of AI systems. This includes considerations around data privacy, transparency, accountability, and fairness. By embedding ethical standards into the design and implementation of AI technologies, developers can help ensure that these systems are not only effective but also just and equitable. This proactive approach can lead to more robust and resilient AI solutions that align with societal values and contribute positively to human welfare.

The Risks of Unaligned AI

The risks associated with unaligned AI are profound and far-reaching. When AI systems operate without proper alignment to human values, they can produce unintended consequences that may be detrimental to individuals and society as a whole. For instance, an unaligned AI tasked with optimizing a particular outcome might prioritize efficiency over safety, leading to harmful decisions in critical areas such as healthcare or transportation. The potential for catastrophic failures increases significantly when AI systems are not designed with alignment in mind, raising urgent questions about accountability and responsibility.

Additionally, unaligned AI poses significant ethical dilemmas. As these systems become more autonomous, the potential for bias and discrimination grows. If an AI system is trained on biased data or lacks a comprehensive understanding of diverse human experiences, it may perpetuate or even exacerbate existing societal inequalities. This can lead to outcomes that disproportionately affect marginalized communities, further entrenching systemic injustices. The risks of unaligned AI highlight the necessity for rigorous oversight and ethical frameworks that guide the development and deployment of these technologies.
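The efficiency-over-safety failure mentioned above can be made concrete with a toy example. The sketch below is purely illustrative: the `Plan` fields, the risk figures, and the `risk_weight` penalty are all assumptions invented for this example, not part of any real planning system.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Plan:
    name: str
    minutes: float  # time to complete the task (lower means more efficient)
    risk: float     # assumed probability of a harmful incident, between 0 and 1

def pick_plan(plans, risk_weight=0.0):
    """Return the plan with the lowest cost.

    With risk_weight=0 the objective only measures efficiency, so safety
    plays no part in the choice. A positive risk_weight adds a penalty
    proportional to each plan's risk.
    """
    return min(plans, key=lambda p: p.minutes + risk_weight * p.risk)

plans = [
    Plan("fast_but_risky", minutes=10, risk=0.20),
    Plan("slow_but_safe", minutes=14, risk=0.01),
]

misspecified = pick_plan(plans)                # objective sees efficiency only
penalized = pick_plan(plans, risk_weight=100)  # objective also penalizes risk
print(misspecified.name, penalized.name)
```

The optimizer is identical in both calls; only the objective changes. That is the point of the example: the system does exactly what it was asked to do, and the harm comes from what the objective left out.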

Approaches to Achieving AI Alignment

Achieving AI alignment requires a multifaceted approach that combines technical innovation with ethical considerations. One promising strategy involves the development of robust training methodologies that incorporate diverse datasets and perspectives. By ensuring that AI systems are exposed to a wide range of human experiences during their training phases, developers can help mitigate biases and enhance the system’s ability to understand and prioritize human values effectively. This approach emphasizes the importance of inclusivity in data collection and algorithm design.

Another critical avenue for achieving alignment is through interdisciplinary collaboration. Engaging ethicists, sociologists, psychologists, and other experts alongside computer scientists can foster a more holistic understanding of the implications of AI technologies. This collaborative effort can lead to the creation of guidelines and best practices that prioritize ethical considerations throughout the development process. Furthermore, ongoing dialogue between technologists and policymakers is essential for establishing regulatory frameworks that promote responsible AI use while encouraging innovation.

The Role of Ethics in AI Development

| Metric | Description | Current Status | Challenges | Potential Solutions |
|---|---|---|---|---|
| Goal Specification Accuracy | Degree to which AI systems correctly interpret and pursue intended human goals | Moderate; often misaligned in complex tasks | Ambiguity in human instructions, incomplete specifications | Improved natural language understanding, formal verification methods |
| Robustness to Distributional Shift | AI’s ability to maintain alignment when encountering novel or unexpected inputs | Low to moderate; performance degrades outside training data | Unpredictable real-world scenarios, adversarial inputs | Adversarial training, continual learning, uncertainty estimation |
| Interpretability | Extent to which AI decision-making processes are understandable to humans | Improving but limited in complex models | Opaque deep learning architectures, lack of transparency | Explainable AI techniques, model simplification |
| Value Alignment | Alignment of AI system’s values and ethics with human norms | Early research stage; no standardized metrics | Diverse human values, cultural differences | Multi-stakeholder input, preference learning, ethical frameworks |
| Safe Exploration | Ability of AI to explore new strategies without causing harm | Limited; risk of unsafe actions during learning | Balancing exploration and safety, unknown risks | Constrained reinforcement learning, risk-sensitive policies |
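To give a sense of what the "Robustness to Distributional Shift" row involves in practice, one crude first step is to detect when an input lies far outside the training data so that the system can defer to a human. The sketch below assumes numeric features and uses a per-feature z-score; the function names and the threshold of 3 standard deviations are hypothetical choices for illustration, and production systems generally rely on richer methods.

```python
import statistics

def fit_reference(train_rows):
    """Compute the per-feature mean and sample standard deviation of the training data."""
    columns = list(zip(*train_rows))
    return [(statistics.mean(c), statistics.stdev(c)) for c in columns]

def is_out_of_distribution(row, reference, z_threshold=3.0):
    """Flag a row if any feature lies more than z_threshold standard deviations from its mean."""
    for value, (mean, std) in zip(row, reference):
        if std > 0 and abs(value - mean) / std > z_threshold:
            return True
    return False

# Toy training data with two numeric features per row (values are made up).
train = [(1.0, 10.0), (1.2, 11.0), (0.8, 9.0), (1.1, 10.5), (0.9, 9.5)]
reference = fit_reference(train)

typical = is_out_of_distribution((1.0, 10.2), reference)  # close to the training data
novel = is_out_of_distribution((5.0, 10.0), reference)    # first feature is far outside it
print(typical, novel)
```

A flag like this does not make a model robust by itself; it only marks the cases where the model's output deserves less trust.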

Ethics plays a pivotal role in shaping the trajectory of AI development. As these technologies become increasingly integrated into everyday life, ethical considerations must inform every stage of their creation, from conception to deployment. Developers must grapple with questions about what constitutes ethical behavior for machines and how to encode human values into algorithms effectively. This requires not only technical expertise but also a deep understanding of moral philosophy and social responsibility.

Incorporating ethics into AI development also involves creating mechanisms for accountability and transparency. Stakeholders must be able to understand how decisions are made by AI systems and have recourse if those decisions lead to negative outcomes. This transparency fosters trust among users and encourages responsible use of technology. By prioritizing ethics in AI development, organizations can create systems that not only perform well but also align with societal values and contribute positively to the common good.

The Impact of Unaligned AI on Society

The impact of unaligned AI on society can be both immediate and long-lasting. When AI systems operate without proper alignment to human values, they can lead to significant social disruptions. For example, unaligned algorithms in hiring processes may inadvertently discriminate against certain demographic groups, perpetuating existing inequalities in employment opportunities. Similarly, unaligned predictive policing algorithms can reinforce biases within law enforcement practices, leading to over-policing in marginalized communities.

Moreover, the societal implications extend beyond individual cases; they can shape public perception and trust in technology as a whole. If people experience negative outcomes from unaligned AI systems, such as unfair treatment or privacy violations, they may become wary of adopting new technologies altogether. This erosion of trust can stifle innovation and hinder progress in fields where AI has the potential to drive positive change. Therefore, addressing the risks associated with unaligned AI is crucial not only for individual well-being but also for fostering a healthy relationship between society and technology.

Ethical Considerations in AI Decision Making

Ethical considerations in AI decision-making are essential for ensuring that these systems operate in ways that reflect human values and priorities. One key aspect is the need for transparency in how decisions are made by AI algorithms. Users should have access to information about the factors influencing an AI’s decision-making process, allowing them to understand and challenge outcomes when necessary. This transparency is vital for building trust between users and technology providers.

Another important consideration is the need for fairness in AI decision-making processes. Developers must actively work to identify and mitigate biases within their algorithms to ensure equitable treatment across different demographic groups. This involves not only careful data selection but also ongoing monitoring and evaluation of AI systems post-deployment. By prioritizing fairness in decision-making, developers can help create a more just society where technology serves as a tool for empowerment rather than oppression.
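The ongoing monitoring described above can start with something as simple as comparing outcome rates across groups. The following is a minimal sketch that assumes decisions are logged as `(group, selected)` pairs; the group labels and counts are invented for illustration, and the ratio it computes is only one of several fairness measures in use.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of positive decisions per group.

    decisions: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical decision log: 6 of 10 selected in group_a, 3 of 10 in group_b.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A common rule of thumb in US employment contexts, the four-fifths rule, treats a ratio below 0.8 as a signal to investigate further. A low ratio is a prompt for review rather than proof of discrimination, and a high ratio does not rule out other forms of unfairness.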

Challenges in Achieving AI Alignment

Despite the growing recognition of the importance of AI alignment, several challenges persist in achieving this goal. One significant hurdle is the complexity of human values themselves; they are often nuanced, context-dependent, and sometimes conflicting. Encoding such intricate values into algorithms poses a formidable challenge for developers who must navigate these complexities while ensuring that their systems remain effective and reliable.

Additionally, there is often a tension between short-term goals and long-term alignment objectives within organizations. Companies may prioritize immediate performance metrics over ethical considerations due to competitive pressures or market demands.

Overcoming these challenges requires a concerted effort from all stakeholders involved in AI development—developers, policymakers, ethicists, and users alike—to prioritize alignment as a fundamental aspect of technological advancement.

The Intersection of AI and Morality

The intersection of AI and morality raises profound questions about what it means for machines to make decisions that impact human lives. As AI systems become more autonomous, they are increasingly tasked with making choices that carry moral weight—such as determining who receives medical treatment or how resources are allocated in times of crisis. This shift necessitates a reevaluation of traditional moral frameworks and an exploration of how these frameworks can be adapted for artificial agents.

Moreover, this intersection challenges developers to consider not only the technical capabilities of their systems but also their moral implications. It prompts discussions about accountability: if an AI system makes a harmful decision, who is responsible? Is it the developer who created the algorithm, the organization deploying it, or the machine itself?

These questions underscore the need for clear ethical guidelines that govern the development and use of AI technologies while fostering a culture of responsibility among those involved in their creation.

The Future of Ethical Artificial Intelligence

The future of ethical artificial intelligence holds great promise but also significant challenges. As society continues to grapple with the implications of advanced technologies, there is an increasing demand for ethical frameworks that guide their development and deployment. This future envisions a landscape where ethical considerations are not merely an afterthought but an integral part of the design process from the outset.

In this evolving landscape, collaboration among diverse stakeholders will be crucial. Policymakers, technologists, ethicists, and community representatives must work together to establish standards that promote responsible innovation while addressing societal concerns. By fostering an environment where ethical considerations are prioritized alongside technical advancements, society can harness the full potential of artificial intelligence while safeguarding against its risks.

Implementing AI Alignment in Practice

Implementing AI alignment in practice requires a strategic approach that encompasses both technical solutions and ethical frameworks. Organizations must invest in research aimed at developing methodologies for aligning AI systems with human values effectively. This includes creating robust training protocols that incorporate diverse perspectives and ongoing evaluation processes to monitor performance post-deployment.

Furthermore, fostering a culture of ethics within organizations is essential for promoting responsible AI development. This involves training developers on ethical considerations related to their work and encouraging open dialogue about potential risks associated with unaligned systems. By embedding alignment principles into organizational practices, companies can contribute to a future where artificial intelligence serves as a force for good—enhancing human capabilities while respecting fundamental values.

In conclusion, achieving AI alignment is not merely a technical challenge; it is a moral imperative that requires collaboration across disciplines and sectors. As society navigates this complex landscape, prioritizing ethical considerations will be essential for ensuring that artificial intelligence serves humanity’s best interests now and in the future.



FAQs

What is artificial intelligence alignment?

Artificial intelligence alignment refers to the process of ensuring that AI systems’ goals, behaviors, and outputs are consistent with human values, ethics, and intentions. The aim is to make AI systems act in ways that are beneficial and safe for humanity.

Why is AI alignment important?

AI alignment is crucial because misaligned AI systems could act in ways that are harmful or unintended, potentially causing significant risks to individuals, society, or even humanity as a whole. Proper alignment helps prevent negative outcomes and promotes the safe deployment of AI technologies.

What are the main challenges in AI alignment?

Key challenges include defining human values precisely, ensuring AI systems understand and prioritize these values, dealing with complex and unpredictable environments, and preventing unintended consequences from AI decision-making. Additionally, technical difficulties in interpreting AI behavior and ensuring transparency add to the complexity.

How is AI alignment achieved?

AI alignment is pursued through various methods such as value learning, reward modeling, interpretability research, robust training techniques, and incorporating ethical frameworks into AI design. Researchers also focus on creating AI systems that can explain their decisions and adapt to human feedback.

Who is working on AI alignment?

AI alignment research is conducted by academic institutions, private companies, and nonprofit organizations specializing in AI safety and ethics. Notable contributors include university research groups such as the Center for Human-Compatible AI, AI labs such as OpenAI and DeepMind, and, until its closure in 2024, the Future of Humanity Institute.

Is AI alignment only relevant for advanced AI systems?

While alignment concerns are especially critical for advanced or potentially superintelligent AI, alignment principles are also important for current AI systems to ensure they behave safely and ethically in real-world applications.

What is the difference between AI alignment and AI safety?

AI alignment specifically focuses on aligning AI systems’ goals and behaviors with human values, whereas AI safety encompasses a broader range of issues including robustness, security, reliability, and preventing unintended harmful consequences from AI systems.

Can AI alignment guarantee that AI will never cause harm?

No approach can guarantee absolute safety, but AI alignment aims to significantly reduce risks by designing AI systems that act in accordance with human values and intentions. Ongoing research seeks to strengthen these assurances over time.

How does AI alignment relate to ethics?

AI alignment is closely connected to ethics because it involves embedding moral principles and human values into AI systems to ensure their actions are ethically acceptable and beneficial to society.

What role does human feedback play in AI alignment?

Human feedback is essential in AI alignment as it helps AI systems learn and adjust their behavior according to human preferences and values. Techniques like reinforcement learning from human feedback (RLHF) are commonly used to incorporate this input.
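In RLHF, human preferences are commonly turned into a training signal by fitting a reward model to pairwise comparisons. The sketch below shows the pairwise loss often used for that step, based on the Bradley-Terry model; the function name and the example reward values are assumptions for illustration, and a real pipeline would compute the rewards with a neural network rather than pass them in as numbers.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Negative log-likelihood that the chosen response beats the rejected one.

    Under a Bradley-Terry model this equals -log(sigmoid(r_chosen - r_rejected)).
    """
    margin = reward_chosen - reward_rejected
    # Both branches compute log(1 + exp(-margin)); splitting on the sign
    # keeps the argument of exp() non-positive and avoids overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

agrees = preference_loss(2.0, 0.0)     # reward model ranks the pair as the human did
disagrees = preference_loss(0.0, 2.0)  # reward model ranks the pair the other way
tie = preference_loss(1.0, 1.0)        # reward model is indifferent
print(agrees, disagrees, tie)
```

The loss is small when the reward model agrees with the human choice and grows as it disagrees, so minimizing it over many comparisons pushes the reward model toward the labelers' preferences.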